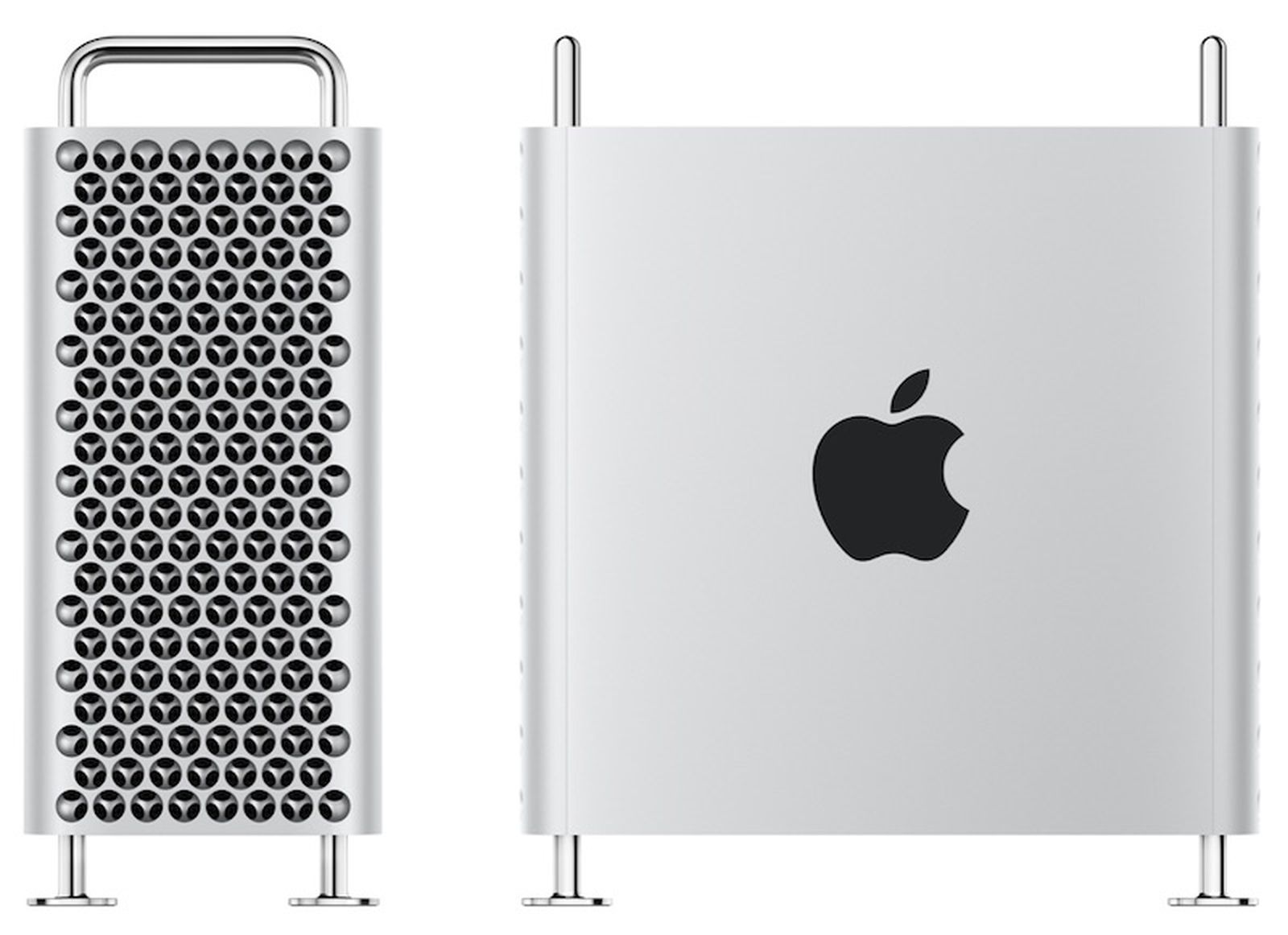

What exactly can you put in a Mac Pro's PCI slot anyway? What can it do that a Studio Ultra can't?

There are a LOT of industrial applications.

Think special I/O cards for controlling manufacturing equipment or reading data from it.

When you look at things like scanning electron microscopes, you see big equipment. When you open them up, there are several computers inside, often with multiple components that control different parts of the tool.

Very rarely do you see any of this controlled by USB, except the keyboard and mouse. When you need 3 nm accuracy, USB-C doesn't look nearly as sexy as a PCIe connection. When you need ultra-precise timing control, PCIe wins again. If you need to control more devices than a single USB host controller can address (127), PCIe to the rescue. This may seem like a niche to you, but I guarantee you the money is good.

Show up at a vendor with $9 million to ask for something 'pretty good' (not the latest and greatest), and, because you are a loyal customer of a major international company, they will deliver it in 'only' 4 years - and only if you put it under contract for 3 years at an additional seven-figure cost annually. And you walk away feeling like you just got a steal compared to the industry standard.

People who need THAT need PCIe.

I have tools at work with racks of 18 Mac Pros in them. And we weren't the only ones who bought those.