Apple is planning to expand its Communication Safety in Messages feature to the UK, according to The Guardian. Communication Safety in Messages was introduced in the iOS 15.2 update released in December 2021, but the feature has been limited to the United States until now.

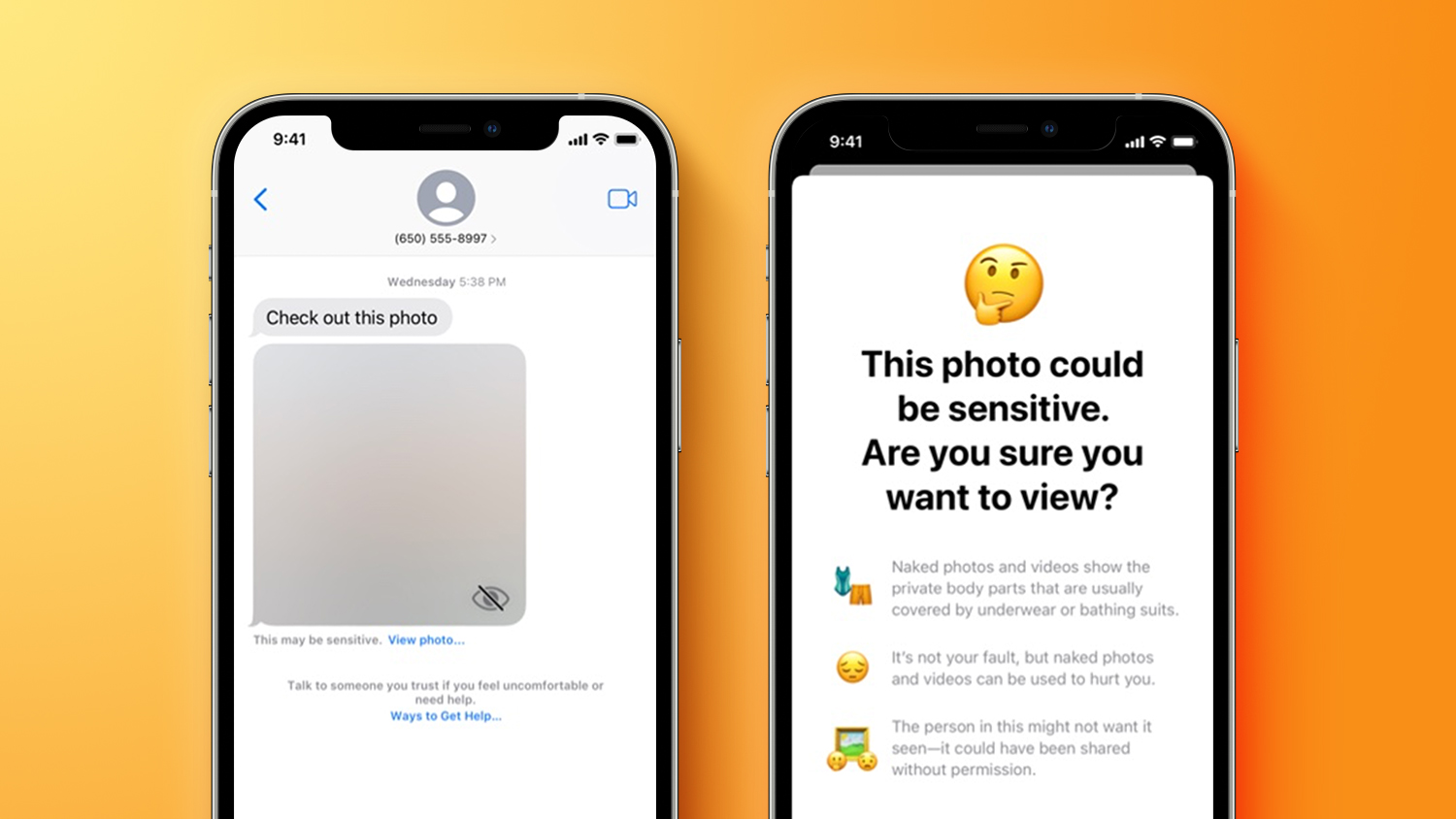

Communication Safety in Messages is designed to scan incoming and outgoing iMessage images on children's devices for nudity and warn children that such photos might be harmful. If nudity is detected in a photo that's received by a child, the photo will be blurred and the child will be provided with resources from child safety groups. Nudity in a photo sent by a child will trigger a warning encouraging the child not to send the image.

Communication Safety is opt-in and privacy-focused, and it must be enabled by parents. It is limited to the accounts of children, with detection done on-device, and it is not related to the anti-CSAM functionality that Apple has in development and may release in the future.

We have a full guide on Communication Safety in Messages that walks through exactly how it works, where it's used, Apple's privacy features, and more.

Update: Communication Safety in Messages is also coming to Canada.

Update 2: The feature is also coming to Australia and New Zealand, according to The Verge.

